Regridding with xesmf¶

The xesmf library is a dask-aware regridding library, based on the Earth System Modelling Framework’s regridder.

Let’s use it to compare CMIP6 results with ERA5. These datasets are on different grids, so we need to regrid to compare them.

We can regrid in either direction, but always keep in mind that regridding never creates new information when you go from a coarse to fine resolution.

import xarray

import xesmf

import intake

import matplotlib.pyplot as plt

import dask.distributed

dask.distributed.Client()

Client

|

Cluster

|

To start out we’ll load the data from the intake catalogue

dsd = (intake.cat.nci.esgf.cmip6.search(source_id='ACCESS-CM2', experiment_id='historical', variable_id='tas', member_id='r1i1p1f1', table_id='Amon')

.to_dataset_dict(cdf_kwargs={'chunks': {'time': 120}}))

ds_cmip = list(dsd.values())[0]

--> The keys in the returned dictionary of datasets are constructed as follows:

'project.activity_id.institution_id.source_id.experiment_id.member_id.table_id.variable_id.grid_label.version'

dsd = (intake.cat.nci.era5.search(product_type='reanalysis', year=2010, parameter='2t')

.to_dataset_dict(cdf_kwargs={'chunks': {'time': 24, 'latitude': None, 'longitude': None}}))

ds_era5 = list(dsd.values())[0]

--> The keys in the returned dictionary of datasets are constructed as follows:

'sub_collection.dataset.product_type'

I’ve chosen the chunk sizes here to be the whole grid in the lat and lon dimensions and small in the time dimension, keeping the chunk size under 100 mb. The regridder runs on the whole horizontal plane at once, so there can’t be any chunking in lat or lon

ds_cmip.tas.data

|

ds_era5.t2m.data

|

Using the xesmf regridder is simple, we just supply datasets for the source and target grids. Xesmf will automatically find the latitude and longitude dimensions, plus the bounds coordinates if available.

Since I’m just plotting the data I’ve chosen a bilinear regridding method. The options here are:

'bilinear': Bilinear interpolation'patch': Smooth interpolation'nearest_s2d': Each destination point gets the nearest source point (nearest grid point interpolation)'nearest_d2s': Each source point goes to its nearest destination point (only once, so there will be gaps if going from a coarse to fine resolution)'conservative_normed': Retains the global sum before and after regridding, handles coastlines if masked'conservative': Retains the global sum before and after regridding, may have artefacts along the coastline if masked

The conservative methods are useful in coupled models where you want to conserve the quantities being regridded. Bilinear and patch are good for visualisation. See https://pangeo-xesmf.readthedocs.io/en/latest/notebooks/Compare_algorithms.html for samples of each algorithm.

If your data is masked so that it only has valid data over certain areas (e.g. the land or ocean) you can tell xesmf this by adding a ‘mask’ variable to your dataset that is 1 in the valid areas and 0 for the grid points to ignore. A simple way to do this is

ds['mask'] = xarray.where(numpy.isfinite(var), 1, 0)

Sometimes the masks of the two datasets don’t perfectly align. In this case you can add extrap_method to the Regridder() arguments, which can be

'inverse_dist': Extrapolated points are the mean of nearby points, inversely weighted by distance'nearest_s2d': Extrapolated points are from their nearest unmasked source grid point

There are additional arguments that can be used if desired to set up the inverse_dist extrapolation, but generally the defaults are fine.

Finally, if you have a global grid let xesmf know by saying periodic=True, otherwise the edges of the grid won’t line up with each other.

regrid_cmip_to_era5 = xesmf.Regridder(ds_cmip, ds_era5, method='bilinear', periodic=True)

The regridding is Dask-aware, so you can give it a large dataset and it will only compute the time-slices you actually use when you make a plot or save the result to a file

tas = regrid_cmip_to_era5(ds_cmip.tas)

tas

/g/data3/hh5/public/apps/miniconda3/envs/analysis3-21.01/lib/python3.8/site-packages/xesmf/frontend.py:464: FutureWarning: ``output_sizes`` should be given in the ``dask_gufunc_kwargs`` parameter. It will be removed as direct parameter in a future version.

dr_out = xr.apply_ufunc(

<xarray.DataArray 'tas' (time: 1980, latitude: 721, longitude: 1440)>

dask.array<transpose, shape=(1980, 721, 1440), dtype=float64, chunksize=(120, 721, 1440), chunktype=numpy.ndarray>

Coordinates:

* time (time) datetime64[ns] 1850-01-16T12:00:00 ... 2014-12-16T12:00:00

height float64 ...

* longitude (longitude) float32 -180.0 -179.8 -179.5 ... 179.2 179.5 179.8

* latitude (latitude) float32 90.0 89.75 89.5 89.25 ... -89.5 -89.75 -90.0

Attributes:

regrid_method: bilinear- time: 1980

- latitude: 721

- longitude: 1440

- dask.array<chunksize=(120, 721, 1440), meta=np.ndarray>

Array Chunk Bytes 16.45 GB 996.71 MB Shape (1980, 721, 1440) (120, 721, 1440) Count 69 Tasks 17 Chunks Type float64 numpy.ndarray - time(time)datetime64[ns]1850-01-16T12:00:00 ... 2014-12-...

- bounds :

- time_bnds

- axis :

- T

- long_name :

- time

- standard_name :

- time

array(['1850-01-16T12:00:00.000000000', '1850-02-15T00:00:00.000000000', '1850-03-16T12:00:00.000000000', ..., '2014-10-16T12:00:00.000000000', '2014-11-16T00:00:00.000000000', '2014-12-16T12:00:00.000000000'], dtype='datetime64[ns]') - height()float64...

- units :

- m

- axis :

- Z

- positive :

- up

- long_name :

- height

- standard_name :

- height

array(2.)

- longitude(longitude)float32-180.0 -179.8 ... 179.5 179.8

- units :

- degrees_east

- long_name :

- longitude

array([-180. , -179.75, -179.5 , ..., 179.25, 179.5 , 179.75], dtype=float32) - latitude(latitude)float3290.0 89.75 89.5 ... -89.75 -90.0

- units :

- degrees_north

- long_name :

- latitude

array([ 90. , 89.75, 89.5 , 89.25, 89. , 88.75, 88.5 , 88.25, 88. , 87.75, 87.5 , 87.25, 87. , 86.75, 86.5 , 86.25, 86. , 85.75, 85.5 , 85.25, 85. , 84.75, 84.5 , 84.25, 84. , 83.75, 83.5 , 83.25, 83. , 82.75, 82.5 , 82.25, 82. , 81.75, 81.5 , 81.25, 81. , 80.75, 80.5 , 80.25, 80. , 79.75, 79.5 , 79.25, 79. , 78.75, 78.5 , 78.25, 78. , 77.75, 77.5 , 77.25, 77. , 76.75, 76.5 , 76.25, 76. , 75.75, 75.5 , 75.25, 75. , 74.75, 74.5 , 74.25, 74. , 73.75, 73.5 , 73.25, 73. , 72.75, 72.5 , 72.25, 72. , 71.75, 71.5 , 71.25, 71. , 70.75, 70.5 , 70.25, 70. , 69.75, 69.5 , 69.25, 69. , 68.75, 68.5 , 68.25, 68. , 67.75, 67.5 , 67.25, 67. , 66.75, 66.5 , 66.25, 66. , 65.75, 65.5 , 65.25, 65. , 64.75, 64.5 , 64.25, 64. , 63.75, 63.5 , 63.25, 63. , 62.75, 62.5 , 62.25, 62. , 61.75, 61.5 , 61.25, 61. , 60.75, 60.5 , 60.25, 60. , 59.75, 59.5 , 59.25, 59. , 58.75, 58.5 , 58.25, 58. , 57.75, 57.5 , 57.25, 57. , 56.75, 56.5 , 56.25, 56. , 55.75, 55.5 , 55.25, 55. , 54.75, 54.5 , 54.25, 54. , 53.75, 53.5 , 53.25, 53. , 52.75, 52.5 , 52.25, 52. , 51.75, 51.5 , 51.25, 51. , 50.75, 50.5 , 50.25, ... -52. , -52.25, -52.5 , -52.75, -53. , -53.25, -53.5 , -53.75, -54. , -54.25, -54.5 , -54.75, -55. , -55.25, -55.5 , -55.75, -56. , -56.25, -56.5 , -56.75, -57. , -57.25, -57.5 , -57.75, -58. , -58.25, -58.5 , -58.75, -59. , -59.25, -59.5 , -59.75, -60. , -60.25, -60.5 , -60.75, -61. , -61.25, -61.5 , -61.75, -62. , -62.25, -62.5 , -62.75, -63. , -63.25, -63.5 , -63.75, -64. , -64.25, -64.5 , -64.75, -65. , -65.25, -65.5 , -65.75, -66. , -66.25, -66.5 , -66.75, -67. , -67.25, -67.5 , -67.75, -68. , -68.25, -68.5 , -68.75, -69. , -69.25, -69.5 , -69.75, -70. , -70.25, -70.5 , -70.75, -71. , -71.25, -71.5 , -71.75, -72. , -72.25, -72.5 , -72.75, -73. , -73.25, -73.5 , -73.75, -74. , -74.25, -74.5 , -74.75, -75. , -75.25, -75.5 , -75.75, -76. , -76.25, -76.5 , -76.75, -77. , -77.25, -77.5 , -77.75, -78. , -78.25, -78.5 , -78.75, -79. , -79.25, -79.5 , -79.75, -80. , -80.25, -80.5 , -80.75, -81. , -81.25, -81.5 , -81.75, -82. , -82.25, -82.5 , -82.75, -83. , -83.25, -83.5 , -83.75, -84. , -84.25, -84.5 , -84.75, -85. , -85.25, -85.5 , -85.75, -86. , -86.25, -86.5 , -86.75, -87. , -87.25, -87.5 , -87.75, -88. , -88.25, -88.5 , -88.75, -89. , -89.25, -89.5 , -89.75, -90. ], dtype=float32)

- regrid_method :

- bilinear

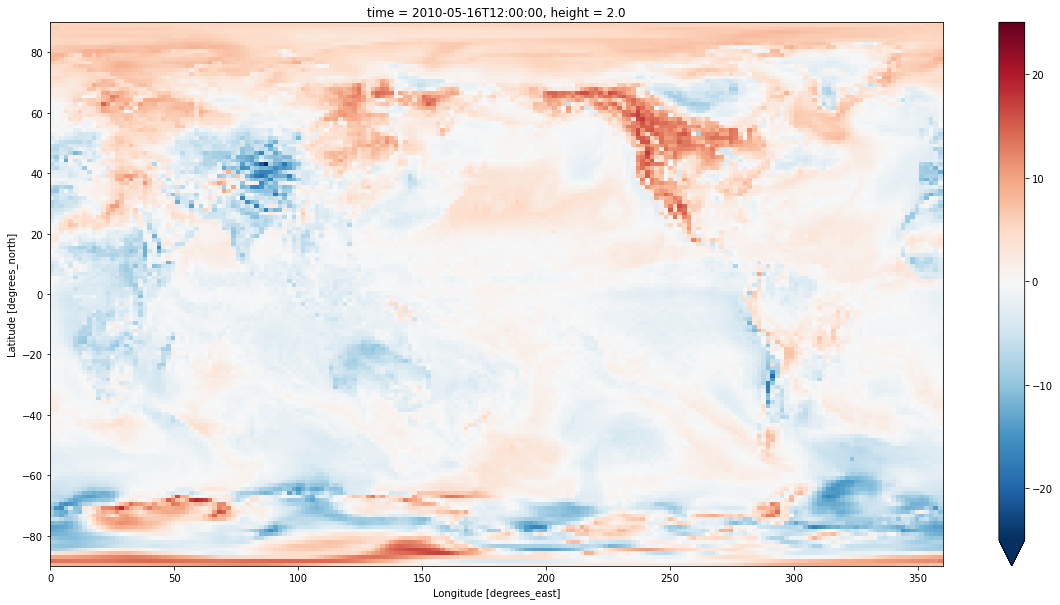

We can now compare the two datasets. Here I’ve done the regrid from the coarse ACCESS-CM2 grid to the fine ERA5 grid. It looks nice, but most of the fine detail is just coming from the ERA5 data, since it uses a 0.25 degree grid. When analysing the data you wouldn’t want to look at scales any smaller than the coarse grid - about 1.5 degrees in this case.

delta_era5_grid = ds_era5.t2m.sel(time='2010-05-16T00:00') - tas.sel(time='2010-05-16', method='nearest')

plt.figure(figsize=(20,10))

delta_era5_grid.plot(vmax=25)

<matplotlib.collections.QuadMesh at 0x7f2c92c98760>

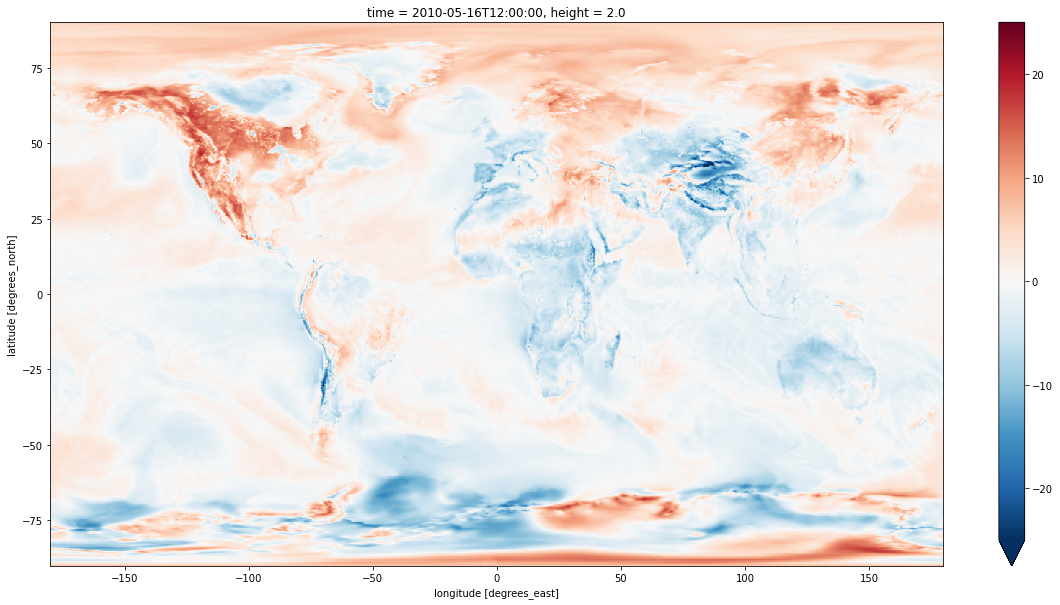

Regridding in the other direction works exactly the same way. The result is consistent with the high resolution grid, although a bit blockier because of the coarser resolution. The useful information is the same in both directions, going from fine to coarse just makes this more explicit.

regrid_era5_to_cmip = xesmf.Regridder(ds_era5, ds_cmip, method='bilinear', periodic=True)

t2m = regrid_era5_to_cmip(ds_era5.t2m)

/g/data3/hh5/public/apps/miniconda3/envs/analysis3-21.01/lib/python3.8/site-packages/xesmf/frontend.py:464: FutureWarning: ``output_sizes`` should be given in the ``dask_gufunc_kwargs`` parameter. It will be removed as direct parameter in a future version.

dr_out = xr.apply_ufunc(

delta_cmip5_grid = t2m.sel(time='2010-05-16T00:00') - ds_cmip.tas.sel(time='2010-05-16', method='nearest')

plt.figure(figsize=(20,10))

delta_cmip5_grid.plot(vmax=25)

<matplotlib.collections.QuadMesh at 0x7f2c7ac96490>